What’s happening “under the hood” with ChatGPT and the Wolfram plugin? Remember that the core of ChatGPT is a “large language model” (LLM) that’s trained on text from the web and other sources to generate a “reasonable continuation” of any text it’s given. But as a final part of its training ChatGPT is also taught how to “hold conversations”, and when to “ask something to someone else”, where that “someone” might be a human or, for that matter, a plugin. In particular, it’s been taught when to reach out to the Wolfram plugin.

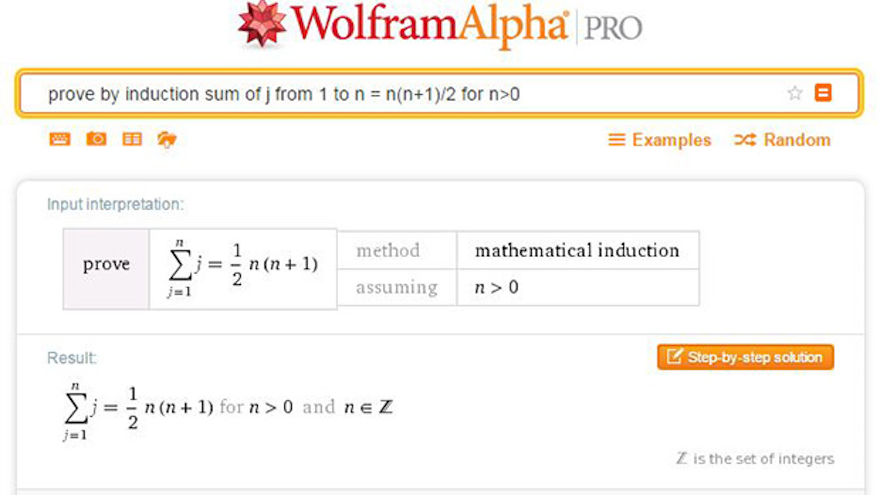

The Wolfram plugin actually has two entry points: a Wolfram|Alpha one and a Wolfram Language one. The Wolfram|Alpha one is in a sense the “easier” one for ChatGPT to deal with; the Wolfram Language one is ultimately the more powerful. The reason the Wolfram|Alpha one is easier is that what it takes as input is just natural language, which is exactly what ChatGPT routinely deals with. And, more than that, Wolfram|Alpha is built to be forgiving, and in effect to deal with “typical human-like input”, more or less however messy that may be.

Wolfram Language, on the other hand, is set up to be precise and well defined, and capable of being used to build arbitrarily sophisticated towers of computation. What Wolfram|Alpha does internally is translate natural language into precise Wolfram Language. In effect it’s catching the “imprecise natural language” and “funneling it” into precise Wolfram Language.

When ChatGPT calls the Wolfram plugin it often just feeds natural language to Wolfram|Alpha. But ChatGPT has by this point learned a certain amount about writing Wolfram Language itself. And in the end, as we’ll discuss later, that’s a more flexible and powerful way to communicate. But it doesn’t work unless the Wolfram Language code is exactly right. Getting it to that point is partly a matter of training. But there’s another thing too: given some candidate code, the Wolfram plugin can run it, and if the results are obviously wrong (for example, if the code generates lots of errors), ChatGPT can attempt to fix the code and try running it again. (More elaborately, ChatGPT can try to generate tests to run, and change the code if they fail.)
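The run, check, and retry cycle described above can be sketched in a few lines. This is only an illustration of the general shape of such a loop, not how ChatGPT or the plugin is actually implemented: `run_with_repair`, `write_code`, `evaluate`, and `RunResult` are hypothetical names, and the toy stand-ins at the bottom merely simulate a model that fixes a typo after seeing an error message.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class RunResult:
    """Outcome of one evaluation: the output plus any error messages."""
    output: str
    errors: List[str]


def run_with_repair(
    task: str,
    write_code: Callable[[str, Optional[str], List[str]], str],
    evaluate: Callable[[str], RunResult],
    max_attempts: int = 3,
) -> Optional[RunResult]:
    """Generate code, run it, and retry with the error messages as feedback.

    write_code(task, previous_code, errors) and evaluate(code) are
    hypothetical stand-ins for the language model and the plugin.
    """
    code: Optional[str] = None
    errors: List[str] = []
    for _ in range(max_attempts):
        code = write_code(task, code, errors)
        result = evaluate(code)
        if not result.errors:
            return result  # ran cleanly, so accept this attempt
        errors = result.errors  # pass the messages to the next attempt
    return None  # gave up after max_attempts


# Toy stand-ins (assumptions for illustration only) so the sketch runs.
def toy_write_code(task, previous_code, errors):
    # The first attempt contains a deliberate typo; the "repair" fixes it.
    return "Prime[10]" if errors else "Prme[10]"


def toy_evaluate(code):
    # Simulated evaluator: it only recognizes the one correct input.
    if code == "Prime[10]":
        return RunResult(output="29", errors=[])
    return RunResult(output="", errors=["unknown symbol Prme"])
```

Calling `run_with_repair("tenth prime", toy_write_code, toy_evaluate)` succeeds on the second attempt. The cap on attempts matters in practice: without it, a model that keeps producing broken code would loop indefinitely.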

There’s more to be developed here, but already one sometimes sees ChatGPT go back and forth multiple times. It might be rewriting its Wolfram|Alpha query (say simplifying it by taking out irrelevant parts), or it might be deciding to switch between Wolfram|Alpha and Wolfram Language, or it might be rewriting its Wolfram Language code. Telling it how to do these things is a matter for the initial “plugin prompt”.

And writing this prompt is a strange activity—perhaps our first serious experience of trying to “communicate with an alien intelligence”. Of course it helps that the “alien intelligence” has been trained with a vast corpus of human-written text. So, for example, it knows English (a bit like all those corny science fiction aliens…). And we can tell it things like “If the user input is in a language other than English, translate to English and send an appropriate query to Wolfram|Alpha, then provide your response in the language of the original input.”

Sometimes we’ve found we have to be quite insistent (note the all caps): “When writing Wolfram Language code, NEVER use snake case for variable names; ALWAYS use camel case for variable names.” And even with that insistence, ChatGPT will still sometimes do the wrong thing. The whole process of “prompt engineering” feels a bit like animal wrangling: you’re trying to get ChatGPT to do what you want, but it’s hard to know just what it will take to achieve that.
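Since the prompt alone does not always get the naming rule obeyed, one could imagine checking generated code mechanically before running it. The sketch below is a rough heuristic under stated assumptions, not part of the actual plugin: `find_snake_case` and `snake_to_camel` are hypothetical helpers, and the regular expression only looks for lowercase words joined by underscores, so it leaves ordinary Wolfram Language patterns such as `x_` or `x_Integer` alone.

```python
import re
from typing import List

# Lowercase words joined by underscores, e.g. my_list or total_sales_2023.
# Patterns like x_ or x_Integer do not match, because the underscore must
# be followed by a lowercase letter or a digit.
_SNAKE = re.compile(r"\b[a-z][a-z0-9]*(?:_[a-z0-9]+)+\b")


def find_snake_case(code: str) -> List[str]:
    """Return the distinct snake_case-looking names in a code string."""
    return sorted(set(_SNAKE.findall(code)))


def snake_to_camel(name: str) -> str:
    """Convert my_list to myList; the first word keeps its lowercase form."""
    head, *rest = name.split("_")
    return head + "".join(part[:1].upper() + part[1:] for part in rest)
```

For example, `find_snake_case("my_list = Range[10]; Total[my_list]")` flags `my_list`, and `snake_to_camel("my_list")` gives `myList`. The rule exists for a practical reason: in Wolfram Language the underscore is pattern syntax, so a name written in snake case is not read as a single variable.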

Eventually this will presumably be handled in training or in the prompt, but as of right now, ChatGPT sometimes doesn’t know when the Wolfram plugin can help. For example, ChatGPT guesses that this is supposed to be a DNA sequence, but (at least in this session) doesn’t immediately think the Wolfram plugin can do anything with it:

ChatGPT and Wolfram Language—A New Era of Human-AI Collaboration

ChatGPT has brought a surprising level of sophistication to the world of communication, with its ability to take a rough draft and transform it into a polished piece, be it an essay, letter, or legal document. Historically, we might have resorted to using template blocks, modifying and assembling them to meet our needs. However, ChatGPT has revolutionized this approach by absorbing a wealth of such templates from the internet, and now adeptly adapts them to meet specific requirements.

Does this magic extend to code? Traditional coding is often fraught with repetitive “boilerplate work”. In fact, a lot of programming involves copying large chunks of code from the internet. ChatGPT, however, seems to be upending this practice by efficiently generating boilerplate code, given just a small amount of human guidance.

Certainly, some human input is required; without it, ChatGPT wouldn’t know what program to write. But this raises an interesting question: why should code require boilerplate at all? Couldn’t we have a language that needs only minimal human input, with no boilerplate?

This is where traditional programming languages hit a roadblock, as they are typically designed to instruct a computer in the computer’s own terms: set this variable, test that condition, and so on. But what if we could flip this around? What if we could start from human concepts and represent them computationally, effectively automating their implementation on a computer?

This is precisely what I’ve been working on for over four decades, leading to the creation of the Wolfram Language—a full-scale computational language. This means that the language contains computational representations for abstract and real-world concepts, from graphs, images, and differential equations to cities, chemicals, and companies.

You might wonder, why not start with natural language? It works to some extent, as evidenced by the success of Wolfram|Alpha. But as soon as we want to specify something more complex, natural language becomes cumbersome, and we need a more structured method of expression.

Historically, this can be seen in the development of mathematics. Until about 500 years ago, math was essentially expressed in natural language. Then mathematical notation was invented, giving rise to algebra, calculus, and an array of mathematical sciences.

With Wolfram Language, the aim is to provide a computational language that can represent anything that can be expressed computationally. To achieve this, we’ve needed to create a language that not only does a lot of things automatically but also inherently understands a lot of things. The outcome is a language designed for humans to express themselves computationally, much like mathematical notation allows mathematical expression. Importantly, unlike traditional programming languages, Wolfram Language is meant to be readable by humans, serving as a structured means of communicating computational ideas to humans and computers alike.

The advent of ChatGPT makes this even more crucial. As we’ve seen, ChatGPT can work with Wolfram Language, building up computational ideas from natural language. The ability of Wolfram Language to directly represent the concepts we wish to discuss is vital. Equally important is that it gives us a way to “understand what we have”, because we can feasibly and efficiently read the Wolfram Language code that ChatGPT has generated.

